Trace

Trace is a wrist-worn assistive device that uses computer vision to recognize, locate, and spatially map everyday objects, all retrievable via voice activation.

A project by Isidora Mack, Yenning Hou, and Renan Teuman.

Harvard University, 2025

SKILLS DEVELOPED

- Computer Vision & Machine Learning
- Developing a product end to end
- Human-Centered System Design

BACKGROUND

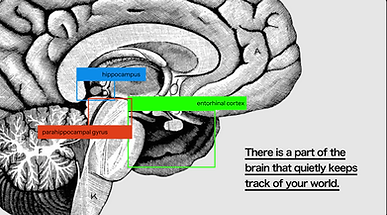

Remembering where everyday objects are is not a passive mental skill—it is supported by a specialized spatial-memory network in the brain, including the hippocampus, entorhinal cortex, and parahippocampal gyrus. This system allows us to encode and retrieve object locations within our environment. Crucially, it is one of the earliest neural systems to decline, often before a formal diagnosis of dementia, in a stage known as Mild Cognitive Impairment (MCI). As this decline begins, people increasingly misplace objects and struggle to form new spatial memories.

While these lapses may appear minor, their cumulative effect is profound. Persistent uncertainty about where essential items are erodes confidence, independence, and a person’s sense of control over daily life. People with MCI remain functionally independent, yet experience measurable cognitive changes. As the global population ages rapidly, the number of people living with early cognitive decline is growing at an unprecedented scale.

The impact extends far beyond the individual. In the United States alone, millions of unpaid caregivers support loved ones with cognitive decline, contributing billions of hours of invisible labor each year. This emotional, physical, and economic burden underscores a critical gap: early-stage cognitive support tools that preserve autonomy, reduce daily friction, and ease the caregiving load before full dependency emerges. Trace was designed in response to this gap, focusing on spatial memory support at the earliest point of decline, when intervention can have the greatest impact.

SOLUTION

Trace has three main components:

- Object Logging: This foundational layer passively localizes objects within a physical environment, associating them with rooms, coordinates, and timestamps. The result is a persistent spatial map of where objects exist and how they move over time.
- Query + Response: The interaction layer between the user and Trace. Object-level spatial data is structured into routines and temporal patterns: how often objects are used, where they are typically placed, and how these patterns change.
- Data Analytics: The top layer synthesizes long-term object logs and user query behavior to infer cognitive state.
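As an illustration of the Object Logging layer, the following is a minimal sketch of what a logged record and a "last seen" lookup could look like. The names `ObjectSighting` and `ObjectLog`, and the field layout, are hypothetical and are not taken from the Trace implementation; the sketch only assumes that each observation carries a label, a room, coordinates, and a timestamp, as described above.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class ObjectSighting:
    """One logged observation of an object (hypothetical schema)."""
    label: str                             # detected object class, e.g. "keys"
    room: str                              # room the object was seen in
    position: Tuple[float, float, float]   # coordinates in the map frame
    seen_at: datetime                      # time of the observation


class ObjectLog:
    """Append-only log that can answer 'where was X last seen?'."""

    def __init__(self) -> None:
        self._sightings: List[ObjectSighting] = []

    def record(self, sighting: ObjectSighting) -> None:
        self._sightings.append(sighting)

    def history(self, label: str) -> List[ObjectSighting]:
        # All sightings of one object, oldest first.
        matches = [s for s in self._sightings if s.label == label]
        return sorted(matches, key=lambda s: s.seen_at)

    def last_seen(self, label: str) -> Optional[ObjectSighting]:
        # Most recent sighting, or None if the object was never logged.
        past = self.history(label)
        return past[-1] if past else None
```

A voice query such as "where are my keys?" would then reduce to `log.last_seen("keys")`, while the analytics layer would read from `history`.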

Our "works-like" prototype next to the "looks-like" device resting in its charging station.

The Trace device modeled on a user in context.

Trace is a wrist-worn device designed to support spatial memory while prioritizing privacy, comfort, and cognitive simplicity. The hardware integrates a compact camera, processor, speaker, microphone, IMU, EMG sensors, LED indicator, and battery module within a soft, silicone-encased form factor. IMU and EMG sensing detect intentional movement and gestures, which in turn trigger the camera—ensuring the device remains passive by default.

Privacy and user agency are central to the physical design. The camera activates only through deliberate EMG gestures, never captures the user’s face, and does not store raw image data. A subtle LED indicator provides immediate visual feedback when sensing is active, reinforcing transparency and trust.

The device is optimized for physical comfort and accessibility. The soft silicone cuff accommodates sensitive skin, while the slip-on form factor and magnetic charging dock support users with reduced dexterity. Interaction is intentionally minimal: there is no screen or complex interface. Users engage with Trace through natural voice queries, allowing the device to remain cognitively lightweight while seamlessly integrating into daily routines.

TECHNICAL PIPELINE

Object detection technical diagram.

Object Detection and Localization

Trace implements a wearable perception and mapping pipeline inspired by robotic localization systems. On-device object detection is performed using YOLOv8 to identify everyday items and extract 2D image coordinates. These detections are paired with monocular depth estimation (Depth Anything model) to recover relative 3D object positions from a single camera.
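To make the pairing of 2D detections with depth concrete, here is a minimal sketch of pinhole back-projection: the center of a detected bounding box is lifted into a 3D point in the camera frame. The function names and the intrinsics (`fx`, `fy`, `cx`, `cy`) are illustrative assumptions, not values from the Trace pipeline. The sketch also assumes the depth value has already been scaled to consistent units, since monocular depth models generally produce relative rather than metric depth.

```python
from typing import Tuple


def box_center(x1: float, y1: float, x2: float, y2: float) -> Tuple[float, float]:
    """Pixel center of a bounding box given as corner coordinates."""
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def backproject(u: float, v: float, depth: float,
                fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """Lift pixel (u, v) with a known depth into camera-frame XYZ.

    Uses the standard pinhole model: fx, fy are focal lengths in pixels
    and (cx, cy) is the principal point. All values here are assumed.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


# Example: a detection box centered on the principal point lies on the optical axis.
u, v = box_center(300, 200, 340, 280)
point = backproject(u, v, depth=2.0, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
```

The resulting point is only meaningful relative to the camera; placing it in the room requires the camera pose.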

To establish a global reference frame, Trace uses COLMAP to perform visual feature matching and camera pose estimation across images captured throughout the environment, producing a sparse 3D map. This map is refined into a dense, surface-aware representation using Neural Radiance Fields (NeRFs), enabling accurate relocalization of new camera frames.
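Once a frame has an estimated pose, a camera-frame point can be moved into the global map frame. The sketch below assumes the pose convention COLMAP uses in its text output, where the quaternion (QW, QX, QY, QZ) and translation (TX, TY, TZ) map world coordinates into the camera frame, so the inverse transform is applied here. The function names are hypothetical, and how Trace actually applies the poses is not specified in this write-up.

```python
import math
from typing import List, Sequence, Tuple


def quat_to_rotmat(qw: float, qx: float, qy: float, qz: float) -> List[List[float]]:
    """Rotation matrix for a (normalized) Hamilton quaternion."""
    n = math.sqrt(qw * qw + qx * qx + qy * qy + qz * qz)
    w, x, y, z = qw / n, qx / n, qy / n, qz / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def camera_to_world(p_cam: Sequence[float],
                    quat: Sequence[float],
                    t: Sequence[float]) -> Tuple[float, float, float]:
    """Invert a world-to-camera pose: X_world = R^T (X_cam - t)."""
    R = quat_to_rotmat(*quat)
    d = [p_cam[i] - t[i] for i in range(3)]
    # Multiplying by the transpose of R: world[j] = sum_i R[i][j] * d[i]
    return tuple(sum(R[i][j] * d[i] for i in range(3)) for j in range(3))
```

Applying `camera_to_world` to the origin `(0, 0, 0)` yields the camera center in the map frame, which is one way the wearer's position could be tracked over time.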

By combining object detection, depth estimation, and persistent 3D mapping, Trace logs object interactions directly in 3D space and tracks user movement and routines over time—functionally operating as a wearable SLAM-like system for everyday environments.

View from the Trace camera with detected objects.

Depth estimation from the Depth Anything model.

Left: the image from the camera. Right: the estimated camera pose within the room's coordinate system.

Adaptive Response Model

Trace incorporates an adaptive response model inspired by how human caregivers interpret and respond to memory-related questions. Rather than treating every query as a simple object retrieval task, the system analyzes patterns of interaction over time. Repeated questions within short intervals or queries that lack a clear object reference are interpreted as potential signals of anxiety or temporal disorientation. In these cases, Trace dynamically adjusts its response—shifting tone and strategy to better support the user instead of repeatedly returning the same factual answer.
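The decision logic described above can be sketched as a small rule-based classifier. This is an illustrative simplification, not the Trace model: the strategy labels (`"locate"`, `"reassure"`, `"clarify"`), the 10-minute window, and the threshold of three repeats are all assumed values chosen for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

REPEAT_WINDOW = timedelta(minutes=10)  # assumed "short interval"
REPEAT_LIMIT = 3                       # assumed number of repeats that triggers a shift


@dataclass
class QueryHistory:
    """Tracks past queries and picks a response strategy for each new one."""
    entries: List[Tuple[datetime, Optional[str]]] = field(default_factory=list)

    def classify(self, asked_at: datetime, obj: Optional[str]) -> str:
        """Record the query and return 'clarify', 'reassure', or 'locate'.

        obj is the object the query refers to, or None if no clear
        object reference could be extracted from the utterance.
        """
        self.entries.append((asked_at, obj))
        if obj is None:
            return "clarify"
        # Count queries about the same object inside the window (including this one).
        recent = [
            t for t, o in self.entries
            if o == obj and timedelta(0) <= asked_at - t <= REPEAT_WINDOW
        ]
        return "reassure" if len(recent) >= REPEAT_LIMIT else "locate"
```

With these assumed settings, the third question about the same object within ten minutes would switch from a plain location answer to a more supportive response.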

Adaptive response model technical diagram.

Data Analytics: Spatial Entropy & Cognitive Drift

Trace translates everyday object interactions into quantitative signals by analyzing how object placement patterns evolve over time and space. We compute object entropy as a measure of spatial disorganization, capturing how consistently an object appears in its expected room versus being dispersed across the environment. Rising entropy reflects increasing irregularity in object–room relationships and serves as an early indicator of cognitive drift.

These temporal signals are paired with spatial visualizations that map entropy directly onto the user’s floor plan. By comparing heat maps across weeks, caregivers can see not only that routines are changing, but where disorganization emerges, such as objects increasingly appearing outside their typical rooms. In parallel, Trace tracks routine stability and drift trends over time, summarizing changes through interpretable indices that surface gradual cognitive shifts long before acute failure occurs. The visualizations below are conceptual and based on synthetic data. They are designed to demonstrate how Trace’s analytics could be interpreted by caregivers, and do not represent a fully implemented application.

Together, these analytics transform passive sensing into actionable insight, enabling early, environment-grounded understanding of cognitive change rather than reactive intervention.
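As a concrete reading of the object entropy described above, the sketch below computes Shannon entropy over the rooms in which an object was observed. Using Shannon entropy in bits (log base 2) is an assumption made for this example, and `room_entropy` is a hypothetical helper, not a function from the Trace codebase.

```python
import math
from collections import Counter
from typing import Iterable


def room_entropy(rooms: Iterable[str]) -> float:
    """Shannon entropy (in bits) of the rooms an object was seen in.

    0.0 means the object always appears in the same room; higher values
    mean its placement is spread across more rooms more evenly.
    """
    counts = Counter(rooms)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return sum((c / total) * math.log2(total / c) for c in counts.values())


# Synthetic example: keys that always return to the hallway versus
# keys that turn up in a different room each time.
stable = room_entropy(["hallway"] * 6)
scattered = room_entropy(["hallway", "kitchen", "bedroom", "bathroom"])
```

Computing this per object over a sliding window of weeks would give the kind of trend that the heat maps and drift indices summarize.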

Entropy computed over the distribution of rooms an object appears in; higher values indicate less consistent placement.

TAKEAWAYS

Trace explores how spatial sensing, wearable hardware, and longitudinal analytics can work together to support memory in everyday life. Rather than diagnosing cognitive decline, the system focuses on making subtle changes in routine and behavior visible earlier and more concretely—at a scale that traditional assessments often miss. This project demonstrates my approach to building human-centered technical systems: grounding advanced perception and mapping techniques in real-world constraints, ethics, and care. Trace ultimately serves as a foundation for future work at the intersection of robotics, sensing, and health-supportive technology.

Object placement heatmap. As objects are placed outside their typical room, the heatmap becomes more dispersed.